- Understand what the MCP is and what Gemini CLI can do

- Apply prompts to read, analyze, and modify files using the Gemini agent

- Create and push content to GitHub with the help of the AI agent

1 What is MCP?

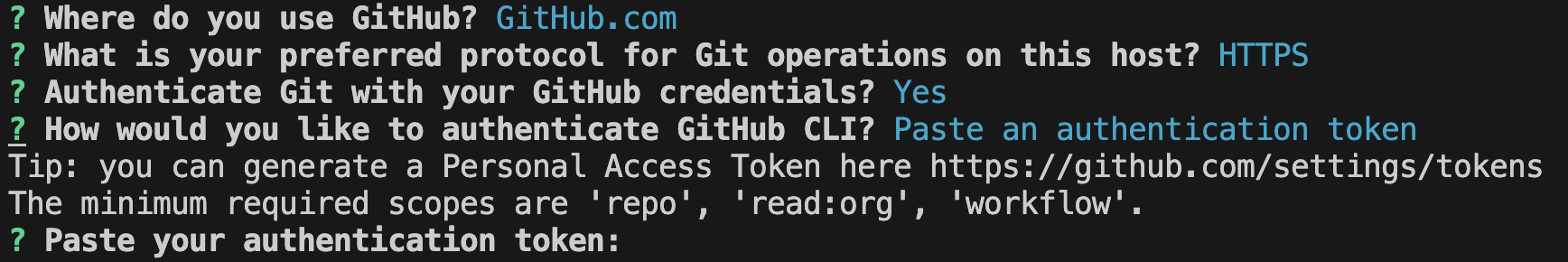

MCP (Model Context Protocol) is a standard for connecting AI models (like Gemini) with the outside world. It provides a safe and consistent way for AI agents to access:

- Local files on your computer

- External tools (like GitHub, Google Workspace, Slack)

- APIs or data services

Think of MCP as a bridge: It defines how an AI model communicates with tools and resources so that interactions are reliable and reproducible.

Image credit: Descope


- Ensures structured communication between AI and external systems

- Improves safety by controlling what the model can and cannot access

- Makes it easier to integrate AI into workflows like coding, research, and publishing
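To make "structured communication" concrete: MCP messages are JSON-RPC 2.0 objects, and a client asks a server to run a tool with the `tools/call` method. The sketch below only builds such a request in Python. The tool name `read_file` and its `path` argument are hypothetical placeholders, since the tools actually available depend on the MCP server you connect to.

```python
import json


def make_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# "read_file" and "path" are hypothetical; a real server advertises its
# own tool names and argument schemas.
request = make_tool_call(1, "read_file", {"path": "notes.md"})
print(json.dumps(request, indent=2))
```

Because every request has the same shape, a client such as Gemini CLI can talk to many different servers without tool-specific glue code.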

2 From MCP to Gemini CLI

Now that you understand what MCP (Model Context Protocol) is, let’s see how it works in practice, beyond Google Workspace.

The Gemini CLI is one of the first practical tools that implements MCP. It allows you to:

- Use Google’s Gemini AI models directly in your terminal

- Access your local files

- Ask questions about code or get data summaries

- Generate code snippets or documentation

- Edit or refactor code via prompts

- Work with GitHub: clone, commit, push, and open issues

🔑 Authentication

- When you first launch Gemini CLI, you’ll be redirected to a webpage for authentication.

- Free tier limits: 60 requests/min and 1,000 requests/day. If you need more than this, generate a Gemini API key for a higher allowance.

- Running Gemini CLI inside VS Code? You will be prompted to authorize VS Code to connect with Gemini CLI.
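A quick calculation shows how the two free-tier limits interact. This is only back-of-the-envelope arithmetic using the numbers quoted above (60 requests/min and 1,000 requests/day). The helper names are made up for illustration and are not part of Gemini CLI.

```python
REQUESTS_PER_MINUTE = 60
REQUESTS_PER_DAY = 1_000
SECONDS_PER_DAY = 24 * 60 * 60


def seconds_between_requests(per_minute):
    """Minimum average spacing that stays under the per-minute limit."""
    return 60 / per_minute


def minutes_until_daily_cap(per_minute, per_day):
    """How long the daily quota lasts if you run at the per-minute limit."""
    return per_day / per_minute


spacing = seconds_between_requests(REQUESTS_PER_MINUTE)
burst_minutes = minutes_until_daily_cap(REQUESTS_PER_MINUTE, REQUESTS_PER_DAY)
even_spacing = SECONDS_PER_DAY / REQUESTS_PER_DAY

print(f"Per-minute limit allows one request every {spacing:.1f} s")
print(f"At that pace the daily quota lasts about {burst_minutes:.1f} min")
print(f"Spread evenly over a day: one request every {even_spacing:.1f} s")
```

In other words, the daily limit is the one you are more likely to hit: running at full speed uses up the day's quota in well under an hour.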

🌐 GitHub Integration

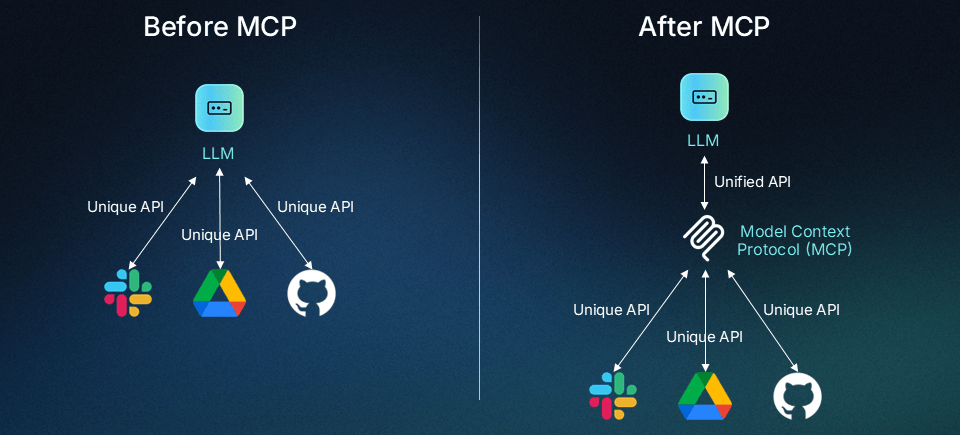

- If GitHub CLI (`gh`) is not installed, Gemini CLI will prompt you to install it. It is recommended to install it outside of the Gemini CLI terminal.

- The first run requires authenticating with your GitHub account. An example GitHub authentication screen is shown below. To obtain an authentication token, go to GitHub settings → Developer settings → Personal access tokens.

✅ Verifying Changes

- The tracked changes can be viewed in VS Code (with the Gemini CLI Companion extension) or in GitHub Desktop.

- Or, in the terminal, run `git diff` to see what changes were made.
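If you would rather check changes from a script, the same information is available through `git diff --name-only`, which lists the modified files one per line. The sketch below is a minimal illustration, assuming `git` is installed and the script runs inside a repository. The function names are hypothetical.

```python
import subprocess


def changed_files(name_only_output):
    """Turn `git diff --name-only` output into a list of file paths."""
    return [line.strip() for line in name_only_output.splitlines() if line.strip()]


def git_changed_files(repo_dir="."):
    """Run git in repo_dir and return the unstaged, modified files."""
    result = subprocess.run(
        ["git", "diff", "--name-only"],
        cwd=repo_dir,
        capture_output=True,
        text=True,
        check=True,  # raise an error if git fails (e.g. not a repository)
    )
    return changed_files(result.stdout)


# Parsing demo on sample output, so it runs without a repository.
sample = "README.md\nsrc/analysis.py\n"
print(changed_files(sample))  # ['README.md', 'src/analysis.py']
```

Calling `git_changed_files()` after the agent has edited your project gives a short list of files to review before you commit.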

3 CLI Tools Comparison

AI command-line tools give researchers different ways to bring AI into their workflows.

Here is a comparison of some of the most widely used ones:

| Tool | Hosted vs Local | Best Use Cases |
|---|---|---|
| Gemini CLI | Cloud (Google) | Best for individual experimentation because it has a substantial free tier and is lightweight |
| Codex CLI | Cloud (OpenAI) | Ideal for coding tasks (GPT-5), debugging, and automation |
| Claude Code | Cloud (Anthropic) | Best for handling large documents, summarization, and long-context reasoning |
| Ollama | Local (runs on your machine) | Perfect for privacy-first workflows, running offline models, and experimenting with open-source LLMs |

4 Key Risks and Mitigation Strategies for Gemini CLI

Gemini CLI and GitHub CLI are powerful — but with great power comes the responsibility to secure credentials, minimize permissions, and update regularly.

| 🔑 Risk | ✅ Mitigation |
|---|---|
| Credential Exposure | Use fine-grained tokens, avoid sharing on multi-user systems |
| Accidental Actions | Double-check commands, avoid risky scripts |
| Too Many Permissions | Apply least privilege, audit tokens regularly |
| Updates | Keep CLI tools up to date |
| Data Privacy | Avoid sharing sensitive data, use local models for sensitive tasks |
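One practical way to reduce credential exposure is to scrub tokens from text before pasting it into a prompt. The sketch below is an illustrative helper, not a Gemini CLI feature. It assumes GitHub personal access tokens start with `ghp_` (classic) or `github_pat_` (fine-grained), and it will not catch other kinds of secrets.

```python
import re

# Assumption: matches GitHub personal access tokens by their prefix only.
# Other secrets (API keys, passwords) need their own patterns.
TOKEN_PATTERN = re.compile(r"\b(?:ghp_|github_pat_)[A-Za-z0-9_]+")


def redact_tokens(text):
    """Replace anything that looks like a GitHub token with a placeholder."""
    return TOKEN_PATTERN.sub("[REDACTED]", text)


# The token below is a made-up example value.
log_line = "export GH_TOKEN=ghp_abc123DEF456 && gh repo list"
print(redact_tokens(log_line))
```

Treat this as a safety net rather than a guarantee: the most reliable mitigation is still to keep tokens out of files and logs that the agent can read.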